ETL in AWS

Overview – ETL in AWS

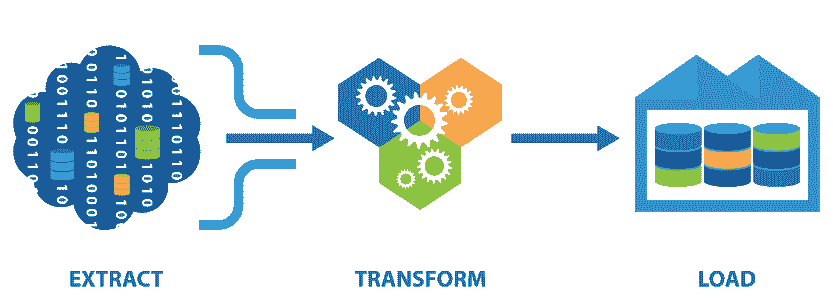

ETL (Extract, Transform, Load) is the process of extracting data from various sources, transforming it into a format that a target database or system can accept, and loading it into that system. On AWS (Amazon Web Services), ETL is commonly used to move data from one system to another, such as from a legacy system to a cloud-based system, or from multiple systems into a data warehouse for analysis.
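To make the three stages concrete, here is a minimal local sketch in Python. It makes two assumptions:

- The input is a small CSV export with hypothetical `order_id`, `amount`, and `currency` columns.
- An in-memory SQLite database stands in for the target system.

No AWS service is involved. On AWS, each function's role would be played by services like those described below.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (assumed CSV layout).
RAW_CSV = """order_id,amount,currency
1001, 19.99 ,usd
1002,5.00,EUR
1003,,usd
"""


def extract(text: str) -> list[dict]:
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[int, float, str]]:
    """Transform: drop rows without an amount, cast types, normalize currency codes."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(amount), row["currency"].strip().upper()))
    return cleaned


def load(records: list[tuple[int, float, str]], conn: sqlite3.Connection) -> int:
    """Load: write the cleaned records into the target table (SQLite stands in for the target)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()
    return len(records)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    loaded = load(transform(extract(RAW_CSV)), conn)
    print(loaded, conn.execute("SELECT * FROM orders").fetchall())
```

Running it loads two of the three rows. The row with a missing amount is dropped during the transform step.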

AWS offers a number of services that can be used for ETL, including:

- AWS Glue:

- This is a fully managed ETL service that makes it easy to extract, transform, and load data. It can automatically discover data and create a catalog of data sources, and it can also generate code for performing transformations and loading data.

- AWS Data Pipeline:

- This is a web service that helps to move and process data across AWS and on-premises data sources. It provides a simple way to create, schedule, and manage data-driven workflows.

- Amazon S3:

- This is a highly scalable object storage service that can be used to store and retrieve data. It can be used as a source or target for ETL processes, and it can also store data for use with other AWS services such as Glue and Data Pipeline.

- Amazon Redshift:

- This is a fully managed, petabyte-scale data warehouse service. It can be used as a target for ETL processes, and it can also be used to analyze data using SQL.

- Amazon Kinesis:

- This is a real-time data streaming service that can be used to collect, process, and analyze data in real time. It can be used as a source for ETL processes, and it can also deliver data to other AWS services such as Redshift and S3 (for example, through Amazon Data Firehose, formerly Kinesis Data Firehose).
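S3 often serves as both the landing zone and the store that Glue catalogs, so the key layout matters. A common convention is Hive-style `key=value` prefixes, which Glue crawlers can recognize as partition columns.

The sketch below only builds key strings and does not call S3. The bucket, table, and file names are hypothetical.

```python
from datetime import date


def partitioned_key(table: str, day: date, filename: str) -> str:
    """Build a Hive-style partitioned S3 object key for daily ETL output.

    `table` and `filename` are hypothetical names chosen by the pipeline owner.
    """
    return f"{table}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/{filename}"


def s3_uri(bucket: str, key: str) -> str:
    """Combine a bucket name and an object key into an s3:// URI."""
    return f"s3://{bucket}/{key}"


if __name__ == "__main__":
    key = partitioned_key("orders", date(2024, 1, 5), "part-0000.parquet")
    print(s3_uri("example-etl-bucket", key))
```

Zero-padding the month and day keeps keys sorting in chronological order when listed.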

When using AWS for ETL, choose services based on the specific requirements of the data and the target system, such as data volume, latency, and transformation complexity. AWS Glue, for example, is well suited to data cataloging and code generation, but may not be the best choice for real-time data streaming. AWS Data Pipeline, on the other hand, is well suited to scheduling data-driven workflows, but may not be the best choice for complex transformations.

Security and compliance requirements also need to be considered when using AWS for ETL. AWS provides a number of security and compliance features that can be used to protect data during ETL processes. These include encryption at rest and in transit (for example, with AWS KMS keys) and access controls through AWS IAM.
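Access controls are usually expressed as IAM policies attached to the role an ETL job runs under. The sketch below builds a least-privilege policy document that allows reading one S3 prefix and writing another.

The bucket and prefix names are hypothetical. If the buckets use SSE-KMS encryption, the role would also need AWS KMS permissions, which are not shown.

```python
import json


def etl_job_policy(source_bucket: str, source_prefix: str,
                   target_bucket: str, target_prefix: str) -> dict:
    """Build an IAM policy that lets an ETL job read one S3 prefix and write another.

    All bucket and prefix names passed in are hypothetical examples.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read objects only under the source prefix.
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{source_bucket}/{source_prefix}*"],
            },
            {   # List the source bucket, restricted to the same prefix.
                "Sid": "ListSourcePrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{source_bucket}"],
                "Condition": {"StringLike": {"s3:prefix": [f"{source_prefix}*"]}},
            },
            {   # Write objects only under the target prefix.
                "Sid": "WriteTargetObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{target_bucket}/{target_prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    policy = etl_job_policy("example-raw-bucket", "orders/", "example-curated-bucket", "orders/")
    print(json.dumps(policy, indent=2))
```

Scoping each statement to a single prefix means a misconfigured job cannot read or overwrite unrelated data in the same buckets.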

Overall, AWS offers a wide range of services for ETL that together provide a flexible and scalable way to move and process data. Organizations can run ETL effectively on AWS and gain insights from their data if they do two things:

- match services to the specific requirements of the data and the target system;
- use the security and compliance features AWS provides.

AWS Support for ETL

ETL (Extract, Transform, Load) is a process that is commonly used to move data from one system to another. AWS (Amazon Web Services) offers a number of services that can be used for ETL, including AWS Glue, AWS Data Pipeline, Amazon S3, Amazon Redshift, and Amazon Kinesis. Together, these services cover each stage:

- extracting data from various sources;
- transforming it into a format suitable for the target;
- loading it into the target database or system.

In this article, we will take a closer look at how ETL works in AWS and which services fit each stage.

The first step in ETL is to extract data from various sources. AWS Glue is a fully managed ETL service that makes it easy to extract data from a variety of sources, including relational databases, data lakes, and SaaS applications. Glue crawlers can automatically discover data and record it in the Glue Data Catalog, which can then be used to extract data from those sources. Glue can also generate code for performing transformations and loading data, which saves time and effort when building ETL jobs.
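A Glue crawler is the usual way to populate the Data Catalog from files in S3. As a sketch, the function below assembles the keyword arguments for the Glue `CreateCrawler` API (exposed in boto3 as `create_crawler`) without calling AWS.

The crawler name, role ARN, database, path, and schedule are all hypothetical.

```python
from typing import Optional


def crawler_request(name: str, role_arn: str, database: str,
                    s3_paths: list[str], schedule: Optional[str] = None) -> dict:
    """Assemble keyword arguments for the Glue CreateCrawler API.

    Intended to be passed as boto3 `glue.create_crawler(**params)`;
    every name and ARN used with it here is hypothetical.
    """
    if not s3_paths:
        raise ValueError("at least one S3 path is required")
    params = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,  # Data Catalog database that receives discovered tables
        "Targets": {"S3Targets": [{"Path": p} for p in s3_paths]},
    }
    if schedule:
        params["Schedule"] = schedule  # Glue schedules use cron(...) expressions
    return params


if __name__ == "__main__":
    params = crawler_request(
        "orders-crawler",
        "arn:aws:iam::123456789012:role/ExampleGlueRole",
        "sales_raw",
        ["s3://example-etl-bucket/orders/"],
        schedule="cron(0 2 * * ? *)",
    )
    print(params)
    # With credentials configured, the same dict could be sent with:
    #   import boto3
    #   boto3.client("glue").create_crawler(**params)
```

Keeping request construction separate from the API call makes the configuration easy to review and test before any resources are created.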

Once data is extracted, the next step is to transform it into a format that the target system can accept. AWS Data Pipeline is a web service that helps to move and process data across AWS and on-premises data sources. It provides a simple way to create, schedule, and manage data-driven workflows. The activities in these workflows (for example, SQL queries or Amazon EMR jobs) transform the data into the desired format. Data can also be transformed inside Amazon Redshift, a fully managed, petabyte-scale data warehouse service, by running SQL against data that has already been loaded.
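When the transform runs inside Redshift, a common pattern is to load new data into a staging table and then merge it into the target with SQL. The helper below only generates that SQL: a delete-then-insert upsert in one transaction. It makes three assumptions:

- The table and key names are hypothetical.
- Both tables have the same column order.
- Names are plain identifiers; anything else is rejected to avoid SQL injection.

```python
import re

# Plain, unquoted SQL identifiers only; anything else is rejected.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def _require_identifier(name: str) -> str:
    """Return `name` if it is a plain SQL identifier; otherwise raise ValueError."""
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def upsert_from_staging_sql(target: str, staging: str, key: str) -> str:
    """Build a delete-then-insert upsert to run inside Redshift.

    Rows in `target` whose `key` also appears in `staging` are replaced by the staged rows.
    Assumes both tables have identical column order; all names are hypothetical.
    """
    target = _require_identifier(target)
    staging = _require_identifier(staging)
    key = _require_identifier(key)
    return "\n".join([
        "BEGIN TRANSACTION;",
        f"DELETE FROM {target} USING {staging} WHERE {target}.{key} = {staging}.{key};",
        f"INSERT INTO {target} SELECT * FROM {staging};",
        "END TRANSACTION;",
    ])


if __name__ == "__main__":
    print(upsert_from_staging_sql("orders", "orders_staging", "order_id"))
```

Recent Redshift releases also support a `MERGE` statement that can express the same upsert. The delete-then-insert form is shown because it relies only on `DELETE ... USING` and `INSERT ... SELECT`.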

The final step in ETL is to load the data into the target system. Amazon S3, a highly scalable object storage service, can serve as the target (for example, as a data lake) as well as a source. It can also store data for use with other AWS services such as Glue and Data Pipeline. Alternatively, data can be loaded into Amazon Redshift, where it can then be analyzed using SQL.
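Loading from S3 into Redshift is typically done with the `COPY` command, which reads files in parallel rather than inserting rows one at a time. The helper below only builds the SQL text and does not connect to a cluster.

The table, S3 prefix, and IAM role ARN are hypothetical. Only Parquet and CSV with a single header row are handled.

```python
import re

# Plain table name, optionally schema-qualified (e.g. "sales.orders").
_TABLE_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def copy_from_s3_sql(table: str, s3_prefix: str, iam_role_arn: str, fmt: str = "PARQUET") -> str:
    """Build a Redshift COPY statement that bulk-loads the files under an S3 prefix.

    Table name, prefix, and role ARN are hypothetical placeholders.
    """
    if not _TABLE_NAME.fullmatch(table):
        raise ValueError(f"unsafe table name: {table!r}")
    if not s3_prefix.startswith("s3://"):
        raise ValueError("s3_prefix must be an s3:// URI")
    if "'" in s3_prefix or "'" in iam_role_arn:
        raise ValueError("single quotes are not allowed in literals")
    fmt = fmt.upper()
    if fmt == "PARQUET":
        options = "FORMAT AS PARQUET"
    elif fmt == "CSV":
        options = "FORMAT AS CSV IGNOREHEADER 1"  # assumes exactly one header row
    else:
        raise ValueError(f"unsupported format: {fmt}")
    return f"COPY {table} FROM '{s3_prefix}' IAM_ROLE '{iam_role_arn}' {options};"


if __name__ == "__main__":
    print(copy_from_s3_sql(
        "sales.orders",
        "s3://example-etl-bucket/orders/",
        "arn:aws:iam::123456789012:role/ExampleRedshiftRole",
    ))
```

Splitting the input into several files under one prefix lets `COPY` spread the load across the cluster.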

Amazon Kinesis is a real-time data streaming service that can be used to collect, process, and analyze data in real time. It can be used as a source for ETL processes, and it can also deliver data to other AWS services such as Redshift and S3. Kinesis is useful when the ETL process needs to run continuously on streaming data rather than in scheduled batches.
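Producers typically write to a Kinesis data stream with the `PutRecords` API, which accepts at most 500 records per request. The sketch below groups events into request-sized batches of `{"Data": ..., "PartitionKey": ...}` entries.

The event fields are hypothetical. The sketch does not enforce the per-request size limit in bytes, and it does not retry failed records.

```python
import json
from typing import Iterable, Iterator

MAX_RECORDS_PER_REQUEST = 500  # PutRecords limit on records per call


def to_entry(event: dict, key_field: str) -> dict:
    """Convert an event into a PutRecords entry: JSON bytes plus a partition key."""
    return {
        "Data": json.dumps(event).encode("utf-8"),
        "PartitionKey": str(event[key_field]),
    }


def batches(events: Iterable[dict], key_field: str,
            size: int = MAX_RECORDS_PER_REQUEST) -> Iterator[list[dict]]:
    """Yield lists of PutRecords entries, each holding at most `size` entries."""
    batch = []
    for event in events:
        batch.append(to_entry(event, key_field))
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, partially filled batch


if __name__ == "__main__":
    events = [{"user_id": i % 7, "clicks": i} for i in range(1201)]
    for batch in batches(events, "user_id"):
        print(len(batch))
        # With credentials configured, each batch could be sent with:
        #   boto3.client("kinesis").put_records(StreamName="example-stream", Records=batch)
```

`PutRecords` can partially fail, so a real producer would check `FailedRecordCount` in each response and resend the failed entries.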

Security and compliance are also important when working with ETL in AWS. AWS provides a number of security and compliance features, such as encryption and access controls, that can be used to protect data during ETL processes.

In conclusion, AWS offers a wide range of services for ETL that provide a flexible and scalable solution for moving and processing data. Organizations can use AWS for ETL effectively if they do two things:

- carefully consider the specific requirements of the data and the target system;
- use the security and compliance features AWS provides.

ETL tools available on AWS

AWS (Amazon Web Services) offers a wide range of tools that can be used for ETL (Extract, Transform, Load) processes. These tools can be used to extract data from various sources, transform it into a format that can be loaded into a target database or system, and load it into that target system. In this article, we will take a closer look at the different AWS tools that are available for ETL and how they can be used to effectively move and process data.

- AWS Glue:

- This is a fully managed ETL service that makes it easy to extract, transform, and load data. It can automatically discover data and create a catalog of data sources, and it can also generate code for performing transformations and loading data. Glue also integrates with other AWS services such as Amazon S3, Amazon Redshift, and Amazon RDS, making it easy to move and process data across these services.

- AWS Data Pipeline:

- This is a web service that helps to move and process data across AWS and on-premises data sources. It provides a simple way to create, schedule, and manage data-driven workflows, and it can be used to move data between Amazon S3, Amazon Redshift, and other data stores.

- Amazon S3:

- This is a highly scalable object storage service that can be used to store and retrieve data. It can be used as a source or target for ETL processes, and it can also store data for use with other AWS services such as Glue and Data Pipeline.

- Amazon Redshift:

- This is a fully managed, petabyte-scale data warehouse service. It can be used as a target for ETL processes, and it can also be used to analyze data using SQL.

- Amazon Kinesis:

- This is a real-time data streaming service that can be used to collect, process, and analyze data in real time. It can be used as a source for ETL processes, and it can also be used to stream data to other AWS services such as Redshift and S3.

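- Amazon EMR:

- This is a managed cluster platform for running big data frameworks such as Apache Spark and Apache Hadoop. It can be used to run large-scale transformations as part of ETL processes.
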
- AWS Lake Formation:

- This is a fully managed service that makes it easy to set up, secure, and manage a data lake. It integrates with other AWS services such as Glue, Data Pipeline, and Redshift, and can be used to create a centralized repository for storing and analyzing data.

- AWS DMS:

- This is a fully managed service that can be used to migrate data between databases, data warehouses, and other data stores. It can be used to move data between on-premises and AWS data stores, and it can also be used to replicate data between data stores for disaster recovery and business continuity.
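With AWS DMS, the tables to migrate or replicate are chosen with a JSON table-mapping document. The sketch below generates selection rules for one schema.

The schema and table names are hypothetical. `%` is the DMS wildcard for matching table names.

```python
import json


def dms_table_mappings(schema: str, include_tables: list[str],
                       exclude_tables: tuple[str, ...] = ()) -> str:
    """Build a DMS table-mapping document made of selection rules.

    Schema and table names are hypothetical; '%' matches any table name in DMS.
    """
    selections = [("include", t) for t in include_tables] + [("exclude", t) for t in exclude_tables]
    rules = [
        {
            "rule-type": "selection",
            "rule-id": str(i),
            "rule-name": f"{action}-{i}",
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": action,
        }
        for i, (action, table) in enumerate(selections, start=1)
    ]
    return json.dumps({"rules": rules}, indent=2)


if __name__ == "__main__":
    # Replicate every table in the hypothetical "sales" schema except "audit_log".
    print(dms_table_mappings("sales", ["%"], ("audit_log",)))
```

The resulting string is the kind of value the `TableMappings` parameter of a DMS replication task expects (for example, in boto3's `create_replication_task`).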

When using these AWS tools for ETL, it is important to consider the specific requirements of the data and the target system. AWS Glue, for example, is well-suited for data cataloging and code generation, but may not be the best choice for real-time data streaming. AWS Data Pipeline, on the other hand, is well-suited for data-driven workflows, but may not be the best choice for complex transformations. Additionally, it’s always important to consider security and compliance requirements when working with ETL in AWS.